IOG Architecture

IOG Cluster

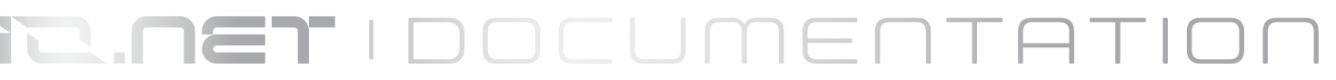

The IOG cluster, hosted on IO Cloud, behaves like a regular Ray cluster with IOG-specific modifications. It consists of a head node and multiple worker nodes, and the head node orchestrates workloads across them. Currently, the head node is a single point of failure: if it becomes inaccessible, the entire cluster becomes inaccessible as well. If a worker node fails, however, the head node marks it as inaccessible and, given proper retry logic, can reschedule its workloads on healthy nodes.

To address the single point of failure issue, the head nodes of current clusters booked on io.net will be distributed across several secure partner data center facilities.

Application

There are two ways to run computations on the cluster. The recommended approach is to run a driver application directly on the cluster's head node, either by editing the program there or by submitting it through the Ray Job submission API. Alternatively, the Ray client library maintains an interactive connection to the cluster, which lets applications outside the cluster communicate with it using Ray's native API.
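As a rough sketch of the job submission route, the snippet below uses Ray's `JobSubmissionClient`. It only applies where the Jobs API is enabled for the cluster. The helper names `dashboard_address` and `submit_driver`, the head node host, and the entrypoint are hypothetical; port 8265 is Ray's default dashboard port and may differ on a given cluster.

```python
def dashboard_address(head_host: str, port: int = 8265) -> str:
    """Build the HTTP address of the Ray dashboard, which serves the Jobs API."""
    return f"http://{head_host}:{port}"


def submit_driver(head_host: str, entrypoint: str) -> str:
    """Submit a driver script as a Ray Job and return its submission ID."""
    # Imported lazily so this module still loads where the Jobs SDK is absent.
    from ray.job_submission import JobSubmissionClient

    client = JobSubmissionClient(dashboard_address(head_host))
    return client.submit_job(
        entrypoint=entrypoint,
        # Ship the current directory to the cluster as the job's working dir.
        runtime_env={"working_dir": "./"},
    )


# Hypothetical usage (requires a reachable cluster with the Jobs API enabled):
# submit_driver("head-node.example", "python driver.py")
```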

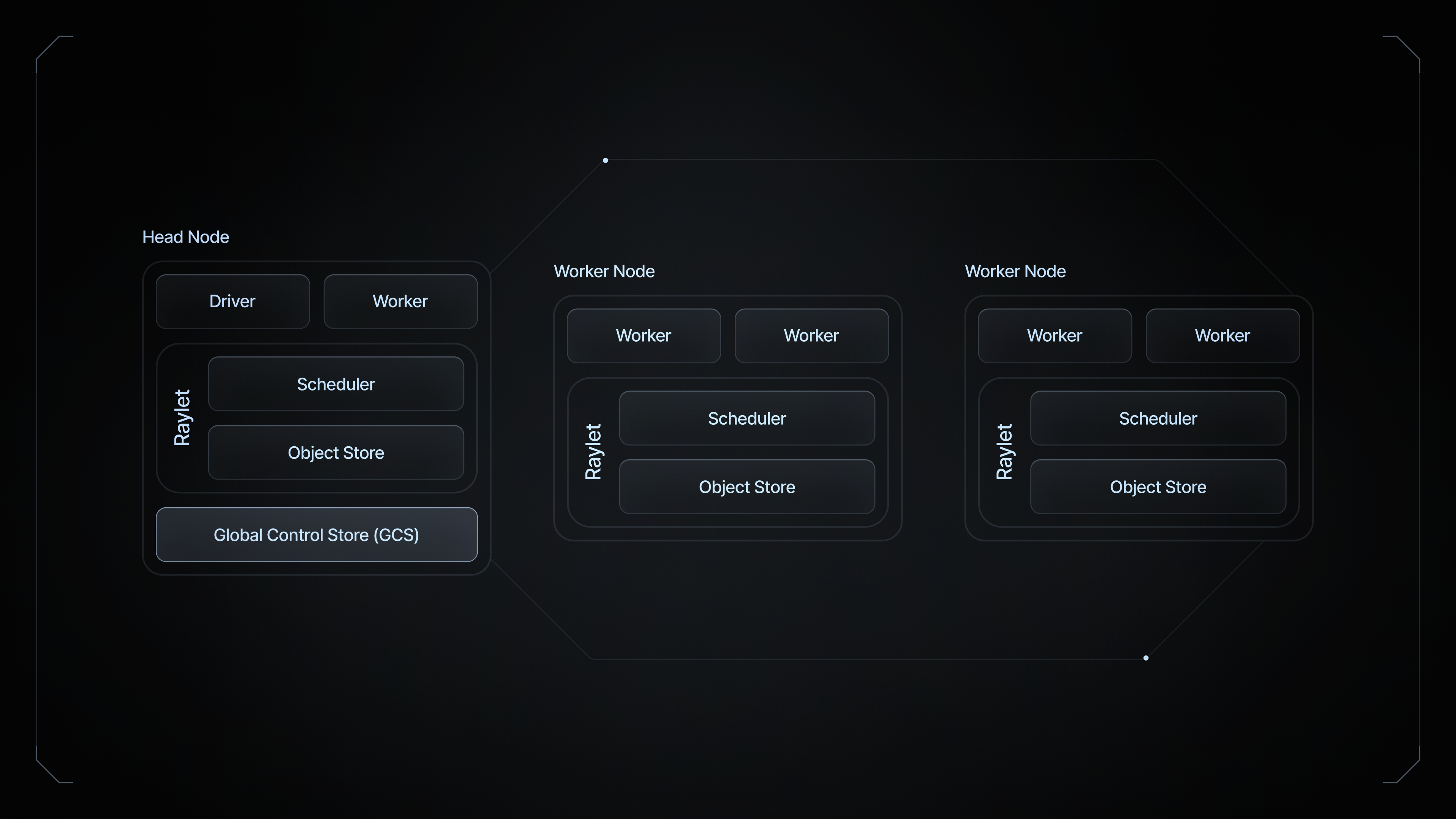

The primary section of the driver program operates on the head node of the Ray cluster, while all other components marked with @ray.remote syntax, which converts functions to tasks and classes to actors, are distributed across the entire cluster.

The Ray cluster serves as a distributed collection of machines. Most user activities occur on the head node, which abstracts GPU resources via Ray APIs rather than exposing them at the OS level. Instead of relying on tools like nvidia-smi, users can utilize the ray status command to view the full list of available resources on the cluster.

Below is an example of a regular Python function that adds 1 to its input:

```python
def plus_1_local(base):
    return base + 1


print(plus_1_local(10))  # Output: 11
```

And an implementation offloading the calculation to one of the resources on the cluster:

```python
import ray


@ray.remote
def plus_1_distributed(base):
    return base + 1


# The function is executed remotely, and the result is returned as a reference.
result_ref = plus_1_distributed.remote(10)

# Retrieve the underlying object from the reference and print.
print(ray.get(result_ref))  # Output: 11
```

Differentiate Ray-Compatible Applications

Three main differences exist between a standard application and a Ray-compatible one:

- The @ray.remote annotation marks the function or actor as a remote execution candidate.

- The function is explicitly executed as a remote function via the .remote() syntax.

- For the main function to access the underlying object, it must retrieve the object from the object references. Other tasks or actors do not need to explicitly retrieve the object from a reference.

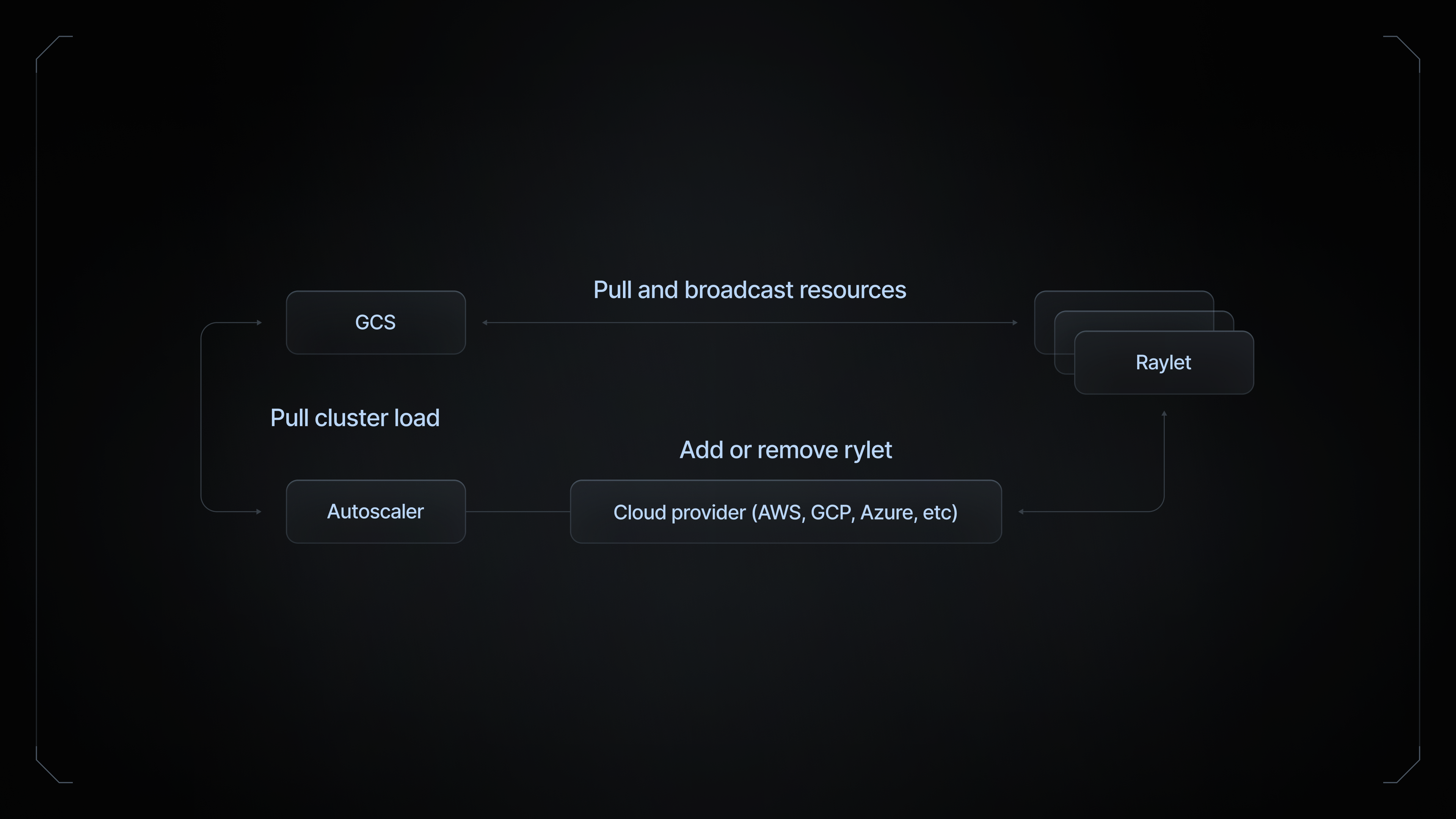

Presently, Ray clusters powered by IOG lack autoscaling or failed node replacement features due to two primary reasons:

- Nodes on IOG are located in different network locations. A private mesh network is established per cluster during instantiation, preventing nodes from joining the network once formed. In later stages, persistent virtual private cloud networks (VPCs) will be allowed.

- The current Ray autoscaler is not budget-aware. Cloud API requests for additional computational resources operate in post-paid mode, while resource reservation on IO Cloud is prepaid upfront. Efforts are underway to implement such a feature in IOG's Ray implementation.
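To make the prepaid constraint concrete, here is a purely hypothetical sketch of what a budget check before scaling up could look like. It is not IOG's or Ray's implementation. The `PrepaidBudget` class, the `capped_scale_up` function, and the prices are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class PrepaidBudget:
    balance: float       # remaining prepaid credit (hypothetical unit)
    hourly_price: float  # price per node per hour (hypothetical)

    def affordable_nodes(self, hours: float) -> int:
        """Number of extra nodes the remaining balance can fund for `hours`."""
        cost_per_node = self.hourly_price * hours
        if cost_per_node <= 0:
            raise ValueError("hours and hourly_price must be positive")
        return int(self.balance // cost_per_node)


def capped_scale_up(requested: int, budget: PrepaidBudget, hours: float) -> int:
    """Grant at most as many nodes as the prepaid balance covers."""
    return min(requested, budget.affordable_nodes(hours))


budget = PrepaidBudget(balance=100.0, hourly_price=2.5)
granted = capped_scale_up(requested=8, budget=budget, hours=6)
print(granted)  # 6
```

A post-paid autoscaler would grant all 8 requested nodes and bill later. With a prepaid balance, the request has to be capped at what is already funded.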

Interacting with the Cluster

For an IOG Cluster powered by Ray, interaction occurs through several services deployed on the head node. Access methods include:

- VS Code Remote or Jupyter Notebook: Remote code editing functions are provided via either Jupyter notebook or VS Code. Users can directly program applications on the cluster's head node and distribute computation tasks interactively.

- Ray Jobs API (currently disabled due to ongoing Shadow Ray attacks): Users can submit Ray Jobs, which are packaged workloads sent to the target cluster for execution.

- Ray Client Interaction (currently disabled due to ongoing Shadow Ray attacks): An interactive method for applications to communicate with the cluster using the native Ray API (tasks and actors). However, if the connection is interrupted, tasks or actors (unless executed with a detached lifecycle) will terminate immediately.
